Abstraction, specifically abstraction of the operating system (OS), its services and the underlying hardware, is a key element in the design and implementation of embedded systems. Abstraction is not new. Board support packages (BSPs) abstract board-specific elements for the OS, which in turn abstracts hardware (memory addresses, registers, etc.) for services and applications. Thanks to the OS, these services and applications don’t have to be rebuilt as a new variant for every board on which they run.

An abstraction layer offers the following to the software in the layer above it, be that an OS, a service or an application:

- Reusability: facilitates redeployment of software above the abstraction layer across different hardware and software variants.

- Longevity: reduces the effort required to implement software on new hardware and new software.

- Speed of development: provides a known and stable environment for which new software can be rapidly designed and deployed.

- Separation and isolation: sets boundaries between system components, isolating them and protecting them from each other.

Since the 2013 release of the Linux® Docker container platform, the merits and demerits of two abstraction models for embedded systems, containers and hypervisors, have been much discussed. A quick Internet search yields an interesting triangle of opinions. Two points of the triangle are occupied by mirror-image camps: strident promoters of containers over hypervisors, and equally strident proponents of hypervisors over containers. The third point, however, is becoming increasingly well-populated, and presents by far the most promising approach to the question. This position can be summarized in two words: it depends.

Less facetiously, containers and hypervisors are not opposing technologies, and presenting them as such makes no sense, either technically or as a business strategy. They both have their uses. They are complementary technologies, and their relative benefits and drawbacks must be evaluated against the requirements of the overall systems for which they are proposed. The relative appropriateness of one or the other depends on these requirements, both technical and business. In fact, as we will see, in many instances the best solution may well involve both technologies.

We exclude machine simulators because these typically offer performance five to 1000 times slower than hardware, and are therefore generally unsuited for embedded systems.

What Is a Container?

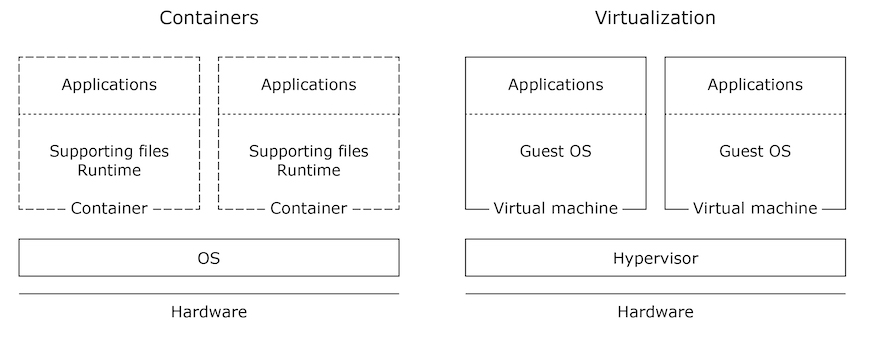

Containers are most commonly used with the Linux OS, though not exclusively so. They provide an abstraction layer between one or more processes (i.e., an application) and the OS on which they run. A container packages these processes and their underlying dependencies together so that they can be easily implemented on any OS that supports the container infrastructure.

For example, an application designed for one Linux release displays and uploads data from a medical device, say, a ventilator. For this it requires drivers for the hardware display on the ventilator. These drivers can be packaged in the container along with the application. If the application needs to be ported to another Linux distribution, say, for a different ventilator model or for an upgrade to an OS that runs on newer hardware, bringing the application over is simply a matter of adding it to the runtime image, as long as the new Linux distribution supports the container infrastructure.

Of course, there are limits to what a container can do. The application in the container, along with its dependencies, runs on an OS, which in turn runs on a specific hardware architecture. The application must therefore be written for the OS and compiled for the underlying hardware architecture. The container isn’t an interpreter or simulator: the application packaged in the container uses the instruction set of the underlying system.
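This constraint can be made concrete with a small sketch. The `Platform` type, the `can_run` helper and the `NATIVE_COMPAT` table are hypothetical names chosen for illustration and are not part of any container runtime. The compatibility entries are assumptions: whether a given 64-bit system can actually run 32-bit code depends on the specific processor and on how the OS is configured.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Platform:
    os: str    # OS the software was built for, e.g. "linux"
    arch: str  # instruction set it was compiled for, e.g. "arm64"


# Assumption for illustration only: host architectures that can also execute
# an older instruction set natively. Real support varies by processor and OS.
NATIVE_COMPAT = {
    "amd64": {"amd64", "386"},
    "arm64": {"arm64", "arm"},
}


def can_run(image: Platform, host: Platform) -> bool:
    """Return True if containerized software built for `image` can run on `host`.

    A container does not translate instructions or system calls, so both the
    OS and the instruction set must match what the host provides.
    """
    if image.os != host.os:
        return False
    # Hosts not listed in the table are assumed to run only their own architecture.
    return image.arch in NATIVE_COMPAT.get(host.arch, {host.arch})
```

Under these assumptions, `can_run(Platform("linux", "arm"), Platform("linux", "arm64"))` is true, while an image compiled for `amd64` is rejected on an `arm64` host no matter which container infrastructure is installed.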

The steel shipping containers now ubiquitous in every port on the planet offer an imperfect but useful analogy. These containers are designed for quick and easy interchangeability. Where only 50 years ago ships often had long delays in port as longshoremen loaded disparate and discrete items, today container ships load and unload containers indifferently. Other than weight and special requirements such as refrigeration, one container is exactly like another. It will load on a ship, a railcar or a truck and continue to its destination. Costly time in port is minimized, to the benefit of all save, perhaps, the ship’s crew, who have less time to enjoy life back on land.

If we think of our application as the contents being shipped, then the software container, like the steel shipping container, provides a uniform interface between the application and the vehicles in which it will run: the stays, cables and ties are all where the vehicle (in our case, the OS) expects them to be. Moving from one OS to the other is simply a case of moving the box and making sure it is secure.

This analogy helps us understand how useful containers can be, and it can also help us understand their limitations. Containers are relatively lightweight; they are an excellent technology for moving software about and redeploying it. Since containers bring with them only the bare essentials needed to run the software they are delivering, they not only facilitate deployment but also offer strict control over exactly what gets deployed.

However, unlike a shipping container, whose usefulness is spent once the cargo is delivered, a software container both facilitates integration of the software into a system and provides a modest level of protection at runtime, limited by the container security and safety model.

Android™ has implemented its own strategy for facilitating software redeployment and upgrades, rendering discussions of containers moot for Android systems. This specificity has no bearing on the usefulness of hypervisors for these systems; a hypervisor virtual machine can host an Android system in the same way, and with the same conditions and limitations, as it can host other operating systems.

What Is a Hypervisor?

Like a container, a hypervisor presents an abstraction of the layer below it to the software layer above. However, unlike a container, whose role is chiefly to facilitate the portability of discrete software components (i.e., services, applications), a hypervisor facilitates redeployment of entire systems, and separates and isolates these systems from each other and itself at runtime.

A hypervisor, also known as a virtual machine manager (VMM), abstracts hardware to present virtual machines. These virtual machines provide environments in which different operating systems and their applications can run. An OS and its applications in a hypervisor virtual machine are known as a guest.

These guests bring everything they need to run just as if they were running directly on hardware: services, device drivers and so on. The abstraction layer, in fact the virtual machine, that the hypervisor provides can be configured such that the guest doesn’t need to know that it is not running directly on hardware, on “bare metal”. A system running on bare metal can be redeployed unchanged to run in a virtual machine. This is not always the best strategy, as hypervisors can provide special software, such as paravirtualized devices, that leverage virtualization to optimize performance in a virtual machine.

Thus, like a container, a hypervisor provides a convenient, low-effort mechanism for redeploying software. Hypervisors are not equivalent to containers, however; the table below presents key similarities and differences of these abstraction layers.

| Container | Hypervisor |
|---|---|
| Abstraction layer for specific processes and their dependencies only. | Abstraction of underlying hardware (virtual machines). |
| Redeploy system components such as services and applications to multiple Linux distributions (distros). | Redeploy entire systems: OS, services, applications, etc. allowing consolidation of diverse systems (e.g., Linux, QNX® Neutrino® RTOS) on a single system on a chip (SoC). |
| Software in container integrated into underlying OS and runs on hardware. | Software in guest managed by hypervisor, but runs directly on hardware. |
| Containered software is integrated into underlying system during runtime, subject to the constraints of the container protection model. | Guest separated from other guests for deployment, and separated and isolated during runtime. |
| Containered software runs as part of OS system. | Guests run as separate, independent systems; hypervisor protects them and itself from outside interference. |
| Containered software can run on “bare metal”, or in a hypervisor virtual machine. | Guest systems can use containers, just as if they were running on “bare metal”. |

Table 1. Comparison of containers and hypervisors

For more detailed information about how hypervisors work, see our Ultimate Guide to Hypervisors.

Understand the Requirements

A good way to clarify how hypervisors and containers can complement each other is to look at how they could apply to a relatively simple use-case. My colleague Michael Brown wrote an excellent introduction to scheduling in a QNX Neutrino OS system. In this post, he uses a fictitious medical ventilator to illustrate his explanation of scheduling techniques and how they interact. We will use the same fictitious medical device to illustrate how a hypervisor and containers can be used together.

To be clear, the system my colleague sketched out to run the ventilator would work perfectly well with neither a hypervisor nor containers. Neither is needed. However, as we will see, using a hypervisor and containers in our design and deployment strategy might bring some important benefits.

For simplicity, Brown set up his system with only three classes of task: critical, non-critical, and alarm. He uses what he himself calls a crude classification of a task as critical or non-critical: if failure will immediately endanger the patient, the task is critical; all other tasks are non-critical. Alarm is a special case of critical. The table below lists these tasks as Brown classified them:

| Task | Level |
|---|---|
| Manage air pressure | Critical |
| Manage oxygen mix | Critical |
| Communicate sensor data to processes that need them to perform tasks | Critical |
| Display breathing curve, pressure, oxygen mix, sensor data | Non-critical |
| Record breathing curve, pressure, oxygen mix, sensor data | Non-critical |
| Upload recorded data | Non-critical |
| Alarms | Alarm |

Table 2. Software tasks in a fictional ventilator.

Brown’s requirements state that alarms should take precedence over all other tasks. This makes sense, because a failed or misbehaving critical task shouldn’t prevent an alarm being triggered. In fact, it might well be the reason we need to trigger an alarm.
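The precedence rule can be sketched in a few lines. This is only an illustration of the ordering implied by the table of ventilator tasks, not a description of how the QNX Neutrino scheduler works. The `Level` enum, its numeric values and the `dispatch_order` helper are assumptions introduced for this example.

```python
from enum import IntEnum


class Level(IntEnum):
    # Numeric values are illustrative; only their relative order matters.
    NON_CRITICAL = 0
    CRITICAL = 1
    ALARM = 2  # a special case of critical that outranks everything else


# Task classification taken from the table of ventilator tasks.
TASKS = {
    "Manage air pressure": Level.CRITICAL,
    "Manage oxygen mix": Level.CRITICAL,
    "Communicate sensor data": Level.CRITICAL,
    "Display data": Level.NON_CRITICAL,
    "Record data": Level.NON_CRITICAL,
    "Upload recorded data": Level.NON_CRITICAL,
    "Alarms": Level.ALARM,
}


def dispatch_order(ready):
    """Order ready tasks so that alarms come first, then critical tasks,
    then non-critical ones. Tasks at the same level keep their input order."""
    return sorted(ready, key=lambda task: TASKS[task], reverse=True)
```

With this ordering, a ready alarm is always placed ahead of a busy or misbehaving critical task, which is exactly the situation in which we most need the alarm to be raised.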

To meet these requirements, we must build what is known as a mixed-criticality system. If we were building a real system, it would of course require approvals for market from the appropriate regulatory bodies (e.g., FDA in the US, MDD in the EU). Such approvals are in turn dependent on certifications to safety standards such as IEC 62304. In the case of our ventilator, we might need to certify the components performing the critical tasks to IEC 62304 Class C, while the components performing non-critical tasks might only require certification to a lower standard, or even none at all.

Considering that we are still struggling to save lives in the COVID-19 pandemic, we need to get our ventilator into hospitals as quickly as possible, while making sure it is safe. We are thus faced with a particularly urgent version of the standard competing requirements triangle: size (features), quality (performance and functional safety) and time. Compromising quality is a non-starter: lives will depend on this device. We have reduced features to the minimum possible. To reduce the time required to get our ventilator to the COVID patients who will need it, we employ a well-known strategy. We will:

- Reuse existing software components where possible.

- Separate and isolate to ensure that the safety-critical components are free from interference from non-safety components.

Reuse Existing Components

We have at hand a time-tested data display and upload system that has been running for years on what is now a rather old Linux distribution designed for an older Linux kernel. Unfortunately, we can’t just use this system as is, for starters because the hardware used for its GUI is no longer available. To be useful to us, then, this system needs some drivers updated to work with the currently available display technologies.

Thankfully, containers allow us to mix capabilities from different runtime environments. We can thus update the drivers and OS services we need, place them in containers and add them to the newer Linux system, confident that the upgrades include everything they need to work as intended.

Separate and Isolate Critical From Non-critical Components

With our non-critical components quickly in order thanks to containers, we can focus on the safety-critical components—though not quite yet.

First, because we want to run on the latest hardware available to us, we will use a modern hypervisor that can create virtual machines offering a clean separation between the legacy hardware we need for our ventilator and the newer resources found on our SoC. Our Linux guest will run oblivious to the fact that it is hosted in a virtual machine presented by a hypervisor running on a new SoC.

With our legacy Linux environment running in a container, we are free to modify our Linux guest and take advantage of new hardware drivers and paravirtualized drivers specific to our virtual environment. The legacy Linux applications are unaware of these updates and continue to run as we expect, but the environment supporting the containers is now optimized for efficiency and has the latest bug fixes in place. Note that this implementation could include running a 64-bit Linux guest with 32-bit container applications.

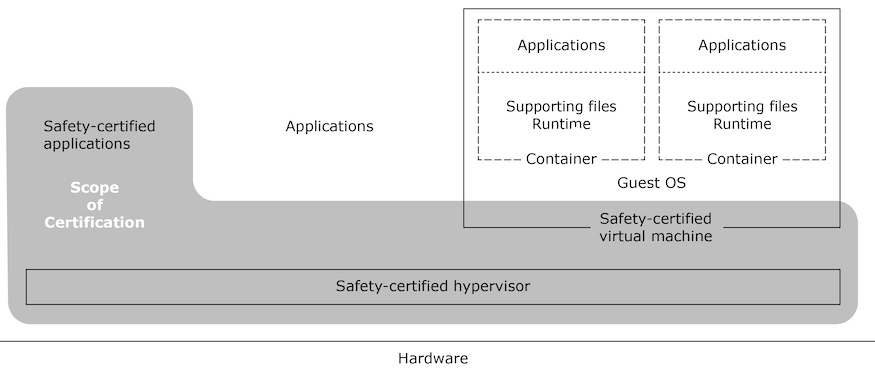

Second, our hypervisor allows us to separate and isolate safety-critical from non-critical systems. With the non-critical system isolated in a virtual machine, we can implement the safety components in their own system, either as a guest system in another virtual machine, or if the hypervisor supports such a configuration, directly at the layer of the hypervisor (“bare metal”).

The hypervisor allows us to nicely contain the diverse systems with the different safety requirements in virtual machines, so we can limit the scope of our safety-proving and certification efforts to just the safety-critical components, reducing the cost and, fundamental for our project, the time needed to complete them. If a safety-certified hypervisor with safety-certified virtual machines is available, the scope of our task is further reduced.

The figure below shows our implementation. The older Linux services along with the updated GUI applications running in containers run in our new Linux guest in a safety-certified virtual machine. The safety-critical components run at the level of the hypervisor. There is no reason to create another virtual machine for them. The virtual machine hosting the non-safety components ensures freedom from interference by these components.
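The placement described here, and its effect on the certification effort, can be written down as a short sketch. The `Component` type, the component names in `VENTILATOR` and the two helper functions are hypothetical; they model only the partitioning decision, not any real hypervisor configuration format.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Component:
    name: str
    safety_critical: bool
    containerized: bool = False  # True for legacy software packaged in a container


# Illustrative component list for the fictitious ventilator.
VENTILATOR = [
    Component("pressure control", safety_critical=True),
    Component("oxygen mix control", safety_critical=True),
    Component("alarms", safety_critical=True),
    Component("display", safety_critical=False, containerized=True),
    Component("data upload", safety_critical=False, containerized=True),
]


def plan(components):
    """Place safety-critical components at the hypervisor level and
    everything else in the Linux guest's virtual machine."""
    layout = {"hypervisor_level": [], "linux_guest_vm": []}
    for c in components:
        key = "hypervisor_level" if c.safety_critical else "linux_guest_vm"
        layout[key].append(c.name)
    return layout


def certification_scope(components):
    """Components we must prove safe ourselves. The hypervisor and its virtual
    machines are left out on the assumption that they are already certified."""
    return [c.name for c in components if c.safety_critical]
```

For the list above, `certification_scope(VENTILATOR)` returns only the three safety-critical components; the containerized display and upload software stays out of scope because the virtual machine isolates it.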

Our fictitious medical ventilator offers just one illustration of how containers and hypervisors can be used together to help solve the persistent triangle of competing requirements. The strategy we have outlined here can of course be adapted to any number of scenarios, not just to facilitate reuse of older code or systems and to speed product delivery, but also, for example, to facilitate upgrades and extend the useful life of products.