Imagine a hospital emergency room—never pleasant, even less so in 2021 with Covid-19 ravaging the planet. You believe you’ve broken your wrist; the triage nurse performed a preliminary exam and gave you something to help with the pain. It doesn’t. It’s been three hours. You’re still waiting, while others jump to the front of the queue: a six-year old in anaphylactic shock, a woman in labor, two heart attacks, a cyclist with head injuries.

In the ER, not all tasks are equally urgent. Your wrist hurts but the wait won’t kill you. For the six-year old whose airways are shutting, it’s a matter of minutes or less. Similarly, for an embedded software system running a critical system (e.g., a ventilator) some tasks are critical, some are not. For example, uploading statistical data to a server can be run as best-effort, but managing the air pressure and oxygen mix, and monitoring the sensors (e.g., oxygen saturation) are, literally, a matter of life and death.

In a real-time operating system (RTOS), the scheduler is like the triage nurse. It must determine how to use finite resources: doctors and nurses in the ER, CPU cores on the board. The scheduler makes sure all tasks in the system run, but in particular, like the triage nurse, it makes sure that critical tasks take precedence. This type of scheduler is a priority-based scheduler. A priority-based scheduler is designed to be deterministic. This means it isn’t designed to be fair; the most critical task can never be preempted unless it blocks (i.e., stops actively consuming CPU cycles), starving lower priority threads of CPU time. These lower-priority threads are like you with your broken wrist; they’ll eventually get their turn, but for now they’re not—well—the priority.

With the QNX Neutrino Real-time Operating System (RTOS) we can use a combination of the following to ensure that all tasks get an opportunity to run while also ensuring that critical tasks run and complete on time:

- scheduling priorities

- scheduling policies

- time partitioning (optional)

Threads

A thread is a sequence of instructions managed by the thread scheduler. Threads can have various states: blocked, stopped, ready and running. When the instructions in the thread are executing on a CPU, the thread is running. Threads from different processes may be required to complete a task, so when we configure partitions and scheduling priorities and policies, we need to think, not about the processes, which are just the code containers for the threads, but about the threads: how they work together and what they need so they can complete their tasks.

Scheduling Priorities

The scheduler uses priorities to determine which thread should run. Every thread is assigned a priority, which the scheduler checks when it is selecting the next thread to run. The higher the priority number, the higher the thread priority: a thread with priority 20 has precedence over a thread with priority 19. Thus, if more than one thread is ready to run, the scheduler selects the thread with the highest priority; this selection also takes processor affinity into account.

Processor affinity limits execution of specified threads to one or more specific cores; it can be used to reserve cores for specified threads and to reduce inefficient thread migration between cores. It can reduce overall processing throughput, however.

Scheduling Policies

A scheduling policy is the algorithm the thread scheduler uses to determine which ready thread to run. It only applies if multiple threads with the same priority are ready to run. If multiple threads with different priorities are ready, the scheduler makes its decision based on the thread priority, not on the scheduling policy. The QNX Neutrino RTOS supports first-in-first-out (FIFO), round-robin and sporadic scheduling.

If time partitions are used, thread scheduling policies are relative only to a partition; they are applied within a partition, but not between partitions.

Time Partitioning

Time partitioning distributes a defined period of CPU time (a periodic cycle) into logical divisions or partitions. Unlike the thread scheduling priorities and policies, which are POSIX standards and obligatory, the time partitioning implemented in QNX systems is a BlackBerry QNX innovation, and optional.

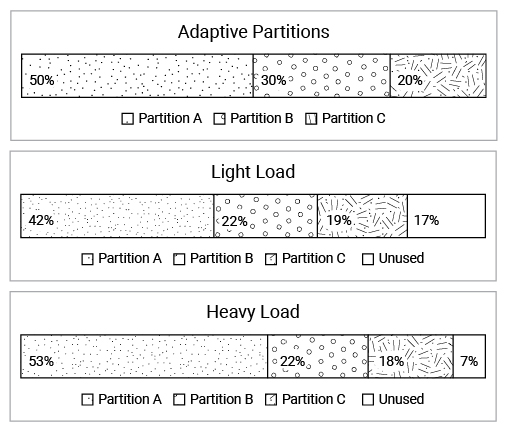

Partitions are made up of one or more threads. These threads don’t need to be from the same process. In fact, multithreaded processes may have each thread assigned to a different partition. Each partition is guaranteed a budget, a predetermined portion of the total CPU time on all CPU cores, and portions don’t need to be equal. For example, one partition might have 50% of CPU time, while two other partitions have 30% and 20% respectively. Any combination is possible, as long as the total doesn’t exceed 100%.

The QNX Neutrino RTOS (and its safety variant, QNX OS for Safety) support adaptive partitioning. Adaptive partitioning is time partitioning that can reallocate CPU time not used by a partition and make it available to other partitions, maximizing performance in resource-constrained systems.

For more information about adaptive partitioning, as well as scheduling priorities and policies, see the Ultimate Guide to Real-time Operating Systems (RTOS), and the QNX Neutrino RTOS System Architecture guide.

A Ventilator

A look at a greatly simplified system running a fictitious medical ventilator will help illustrate how in a QNX Neutrino RTOS system a priority-based scheduler selects the next thread to be run. Table 1 below presents the tasks our system looks after. Tasks are made up of the one or more threads required to complete the task; the threads required for any task may all belong to a single process or they may come from different processes.

Task

|

Level

|

Manage air pressure

|

Critical

|

Manage oxygen mix

|

Critical

|

Communicate sensor data to processes that need them to perform tasks

|

Critical

|

Display breathing curve, pressure, oxygen mix, sensor data

|

Non-critical

|

Record breathing curve, pressure, oxygen mix, sensor data

|

Non-critical

|

Upload recorded data

|

Non-critical

|

Alarms

|

Alarm

|

Table 1. Software tasks in a fictional ventilator

For simplicity, we have only three classes of task: critical, non-critical, and alarm. Our classification of a task as critical or non-critical is similarly crude: if failure will immediately endanger the patient, the task is critical; all other tasks are non-critical. Alarm is a special case of critical. Our requirements state that alarms should take precedence over all other tasks. This makes sense, because a failed or misbehaving critical task shouldn’t prevent an alarm being triggered. In fact, it might well be the reason we need to trigger an alarm.

Assuming that we have understood how our system should and does work, in particular, which threads look after what tasks, we can configure partitions and specify scheduling priorities and policies. We are running on a board with two CPU cores, which our modeling and our tests have shown to be sufficient.

Our Partitions

We group our threads into two partitions, as follows: Partition A (critical processes): 60% and Partition B (non-critical processes and alarms): 40%. Our ventilator design doesn’t in fact need partitions; thread scheduling priorities and policy are sufficient. We use partitions in our example, though, to illustrate how they can be used.

Critical tasks likely won’t need 60% of CPU cycles, but may need up to that much. Non-critical tasks may be a bit squeezed with only 40% of CPU cycles, but with adaptive partitioning they can tap into the 60% reserved for critical tasks, as long as this doesn’t jeopardize the critical tasks’ ability to meet their deadlines. If the cycles aren’t available, we can accept some latency and even some skips in the displaying, recording and uploading of data.

We put the alarm threads in the partition with the non-critical tasks because the alarms don’t need a lot of CPU time (40% is more than adequate), and in this partition the alarm threads won’t pre-empt threads for a critical task, only threads for non-critical tasks. If an alarm goes off, the threads for critical tasks keep running unless our design has specified that at an alarm these processes move to a design safe state (DSS) that requires them to halt.

Our Scheduling Priorities

For simplicity, we consider that all three critical tasks (air pressure, oxygen mix, sensor data) are equally important, so we set the priorities for their threads to 50. They share their partition equally.

We assign the threads for the non-critical tasks the following priorities: display and record: 40, and upload: 30, because uploading data is less time-sensitive than displaying current data to the medical practitioner caring for the patient. Since these threads are in their own partition, they won’t get bumped by threads for the high-priority critical tasks.

Finally, we give the alarm threads, which are in the same partition as the non-critical tasks, a priority of 60 so that they will always be first in line for a CPU core.

Our Scheduling Policy

We will use the round-robin scheduling algorithm. With round-robin scheduling, a thread stops execution when it consumes its time-slice (a specific number of OS timer ticks), allowing other threads of the same priority to run. We might also have used sporadic scheduling, which offers more precise control over thread behavior, but for our example round-robin is sufficient.

The scheduling policy only matters when multiple threads in the same partition and of the same priority are ready to run. Thus, in Partition A, because we have assigned the same priority to the threads for all three tasks, the round-robin policy governs scheduling. In Partition B, the policy applies when threads for display and record are in contention, but priority will dictate that upload threads cede, because we assigned them the lowest priority, as it will dictate that alarm threads always prevail, because they have the highest priority.

Below is our table of tasks to which we have added the partitions and priorities we specified for the threads looking after each task.

Task

|

Level

|

Partition

|

Priority

|

Manage air pressure

|

Critical

|

A

|

50

|

Manage oxygen mix

|

Critical

|

A

|

50

|

Communicate sensor data to processes that need them to perform tasks

|

Critical

|

A

|

50

|

Display breathing curve, pressure, oxygen mix, sensor data

|

Non‑critical

|

B

|

40

|

Record breathing curve, pressure, oxygen mix, sensor data

|

Non-critical

|

B

|

40

|

Upload recorded data

|

Non-critical

|

B

|

30

|

Alarms

|

Alarm

|

B

|

60

|

Table 2. Tasks with their partitions and priorities specified

What We Didn’t Discuss

Our example is a simple illustration of how adaptive partitions and scheduling priorities and policies can be used together in a QNX Neutrino RTOS system. It is necessarily incomplete. Relevant topics we haven’t discussed include priority inversion (a lower priority task indirectly pre-empting a higher priority task), critical thread priorities, critical budgets in adaptive partitions, sporadic scheduling, and processor affinity—or even how the number of CPU cores on our board might affect our design.

If you need to know about these topics as they apply to QNX Neutrino RTOS systems, please contact us, or stay tuned for future blog posts here.