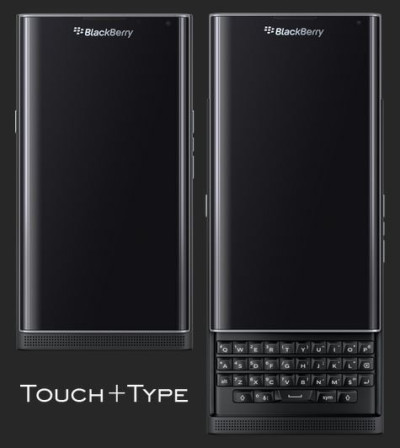

In this two-part blog series, we tell you everything you need to know to take full advantage of the keyboards available on the PRIV™ by BlackBerry®. Part 1 covered topics pertaining to a device with both a physical and a virtual keyboard. Part 2 covers how to work with the capacitive touch keyboard.

Capacitive Keyboard Integration

The new capacitive physical keyboard can be used like a touchpad to scroll web pages, flick predictive text onto the screen, enable the cursor, and control the cursor precisely. The keyboard can identify up to 4 unique touch points. Because we designed it to behave much like a touchpad, many applications can make use of it without requiring any code changes.

Scrolling Using the Physical Touch Keyboard

Without extra development, application UIs built with standard Android™ view containers such as ScrollView and HorizontalScrollView provide scrolling behavior for motion on the touch keyboard when not performing text input.

If you have custom scrollable views, ensure that they handle mouse scroll wheel movement so that scrolling behaves consistently. This is the movement produced by an external mouse connected to any Android device, or by an external touchpad that behaves as a pointer device.

You should make sure that:

- Motion on the scroll wheel moves your view in the same direction as a ScrollView for vertical movement, or as a HorizontalScrollView for horizontal movement.

- You consider the magnitude of the events; if you move your view the same distance for every mouse scroll event without considering the magnitude, your view is likely to move too fast and not scroll smoothly.
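The two points above can be sketched in plain Java. This is a minimal illustration, not the platform's implementation: `WheelScrollMath`, `nextScrollY`, and the `pixelsPerUnit` scale factor are hypothetical names, and the sign convention (a positive wheel value moves toward the top of the content) is our understanding of how ScrollView behaves. In a real view, the `vscroll` argument would come from `getAxisValue(MotionEvent.AXIS_VSCROLL)`.

```java
/** Hypothetical helper: turns a scroll wheel axis value into a clamped scroll offset. */
public final class WheelScrollMath {
    private WheelScrollMath() {}

    /**
     * @param currentScrollY current vertical scroll offset, in pixels
     * @param vscroll        wheel axis value for one event (assumed: positive = toward the top)
     * @param pixelsPerUnit  assumed scale factor: pixels to move per unit of wheel motion
     * @param maxScrollY     largest valid scroll offset, in pixels
     * @return the new scroll offset, clamped to [0, maxScrollY]
     */
    public static int nextScrollY(int currentScrollY, float vscroll,
            float pixelsPerUnit, int maxScrollY) {
        // Scale by the event magnitude instead of applying a fixed step per event.
        final int delta = Math.round(vscroll * pixelsPerUnit);
        // A positive wheel value reveals earlier content, so the offset decreases.
        final int target = currentScrollY - delta;
        return Math.max(0, Math.min(maxScrollY, target));
    }
}
```

With a factor of 64 pixels per unit, a full unit of wheel motion moves the view 64 pixels while a quarter unit moves it only 16, which is what keeps slow motion smooth.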

To more fully exploit the PRIV keyboard in an application or in an input method editor (IME) for control beyond simple scrolling, it’s important to understand the full range of input events that this device can provide.

Identify the InputDevice

The touch keypad provides an InputDevice with a source of InputDevice.SOURCE_TOUCHPAD in addition to the keyboard device that provides key events. The resolution (density) is set to match the device display, which is convenient for gesture detection. If you want more low-level information on touch devices, please consult the Android developer guidance for touch devices.

The code below can be used to find and log the details of the device, since InputDevice.toString() provides formatted details. A complete working sample application that demonstrates capturing touch events from the physical keyboard on PRIV can be found here: CKBDemo

```java
final int[] deviceIds = InputDevice.getDeviceIds();
for (final int id : deviceIds) {
    final InputDevice device = InputDevice.getDevice(id);
    if (device != null && ((device.getSources() &
            InputDevice.SOURCE_TOUCHPAD) == InputDevice.SOURCE_TOUCHPAD)) {
        // Log.i() takes a String message, so format the device explicitly.
        android.util.Log.i("DeviceLogger", device.toString());
    }
}
```

The output (with logcat prefixes removed) is as follows:

```text
Input Device 7: touch_keypad
  Descriptor: 954faadc99bb5a7c1d0537b923e0490c90b47e98
  Generation: 137
  Location: built-in
  Keyboard Type: none
  Has Vibrator: false
  Sources: 0x100008 ( touchpad )
    AXIS_X: source=0x100008 min=0.0 max=1420.0 flat=0.0 fuzz=0.0 resolution=21.0
    AXIS_Y: source=0x100008 min=0.0 max=609.0 flat=0.0 fuzz=0.0 resolution=21.0
    AXIS_PRESSURE: source=0x100008 min=0.0 max=1.0 flat=0.0 fuzz=0.0 resolution=0.0
    AXIS_SIZE: source=0x100008 min=0.0 max=1.0 flat=0.0 fuzz=0.0 resolution=0.0
    AXIS_TOUCH_MAJOR: source=0x100008 min=0.0 max=1546.3961 flat=0.0 fuzz=0.0 resolution=0.0
    AXIS_TOUCH_MINOR: source=0x100008 min=0.0 max=1546.3961 flat=0.0 fuzz=0.0 resolution=0.0
    AXIS_TOOL_MAJOR: source=0x100008 min=0.0 max=1546.3961 flat=0.0 fuzz=0.0 resolution=0.0
    AXIS_TOOL_MINOR: source=0x100008 min=0.0 max=1546.3961 flat=0.0 fuzz=0.0 resolution=0.0
```

Since the InputDevice.isExternal() method is hidden, applications can’t determine that the device is internal (built-in) without using reflection or some other indirect method. Note that the output above identifies the device location, but parsing the output of InputDevice.toString() to extract the location would be brittle, relying on formatting that has no guarantee of consistency between platform versions.

However, it may not be necessary to distinguish the internal device from external touchpad devices; you may wish to provide similar behavior for external touchpads connected to any Android device. In this case, you should consider the density of each MotionRange to provide some consistency of experience for gesture detection. Devices with the same source type are actually relatively rare; external touchpads frequently provide a source with the class SOURCE_CLASS_POINTER.
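One way to take the density of a MotionRange into account is to convert raw axis distances into physical distances before applying gesture thresholds. The sketch below assumes the resolution of a position axis is reported in units per millimeter (our reading of MotionRange.getResolution()); `TouchpadUnits` and its methods are hypothetical helper names, and the 15 mm threshold in the usage note is purely illustrative.

```java
/** Hypothetical helper: normalizes raw touchpad distances using the axis resolution. */
public final class TouchpadUnits {
    private TouchpadUnits() {}

    /**
     * Converts a raw axis distance to millimeters.
     *
     * @param rawDistance distance in axis units (may be negative)
     * @param unitsPerMm  axis resolution, assumed to be in units per millimeter
     */
    public static float toMillimeters(float rawDistance, float unitsPerMm) {
        if (unitsPerMm <= 0f) {
            // Some axes report a resolution of 0.0, meaning it is unknown.
            throw new IllegalArgumentException("Unknown axis resolution: " + unitsPerMm);
        }
        return rawDistance / unitsPerMm;
    }

    /** Returns true if the motion covers at least minMm millimeters in either direction. */
    public static boolean exceedsSwipeDistance(float rawDistance, float unitsPerMm, float minMm) {
        return Math.abs(toMillimeters(rawDistance, unitsPerMm)) >= minMm;
    }
}
```

With the resolution of 21.0 shown in the log output above, a raw distance of 210 units corresponds to 10 mm, so `exceedsSwipeDistance(210f, 21f, 15f)` is false, while the same raw distance on a device reporting 10 units per millimeter would pass.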

Events originating from the touch keyboard are MotionEvents with a source of SOURCE_TOUCHPAD and a device ID corresponding to the InputDevice described above. PRIV supports up to 4 distinct touches on its physical keyboard, represented by separate pointer IDs. The touch event sequences for the physical keyboard are similar to those of a touch screen device, except that the source type of these touches is not directly associated with a display.
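The source checks used throughout this post are plain bit-mask tests. The sketch below copies the relevant constant values from android.view.InputDevice into a hypothetical `SourceCheck` class so that the logic can be run without an Android device; in an app you would use the InputDevice constants directly.

```java
/** Hypothetical stand-alone illustration of input source bit-mask checks. */
public final class SourceCheck {
    private SourceCheck() {}

    // Values mirrored from android.view.InputDevice for illustration only.
    public static final int SOURCE_CLASS_POINTER = 0x00000002;
    public static final int SOURCE_CLASS_POSITION = 0x00000008;
    public static final int SOURCE_TOUCHSCREEN = 0x00001002;
    public static final int SOURCE_MOUSE = 0x00002002;
    public static final int SOURCE_TOUCHPAD = 0x00100008;

    /** Returns true if every bit of mask is set in source. */
    public static boolean isFromSource(int source, int mask) {
        return (source & mask) == mask;
    }
}
```

Note that `isFromSource(SOURCE_TOUCHPAD, SOURCE_CLASS_POINTER)` is false: the touch keypad belongs to the position class rather than the pointer class, which is why its touches are not tied to display coordinates, whereas SOURCE_MOUSE and SOURCE_TOUCHSCREEN both carry the pointer class bit.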

Event Flow

Beyond the InputDispatcher, a physical keyboard touch event follows this basic flow:

- If there’s an active IME, the IME can handle a keyboard touch event by overriding onGenericMotionEvent(MotionEvent). The default implementation:

  - Returns true for events from the built-in touchpad if the IME is active, causing the event to be consumed with no action. This prevents the events from causing scrolling while typing, which could provide a negative experience.

  - Returns false if the IME is not active.

- If the IME did not consume the event, the event is dispatched to the foreground Activity’s view hierarchy, and can be handled in an override of Activity.dispatchGenericMotionEvent(), View.dispatchGenericMotionEvent(), View.onGenericMotionEvent() or Activity.onGenericMotionEvent().

If implementing an IME which overrides onGenericMotionEvent(MotionEvent) to handle other events, the parent implementation should be called for any events not handled, in order to preserve this behavior.

If the BlackBerry® Keyboard is the active IME, it provides a range of keyboard gestures using the touch keypad.

Most existing Android applications and views don’t handle events with a source of SOURCE_TOUCHPAD. To allow these events to provide scrolling behavior in the widest possible range of applications, a fallback is provided: motion on the touch keypad that is not handled by the app is transformed into a stream of new MotionEvents equivalent to scrolling with a mouse scroll wheel, and these synthetic events are then dispatched to the application.
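As a rough summary of the flow described so far, the toy model below decides where a touch keypad event ends up when the IME uses the default onGenericMotionEvent() behavior. It is only a sketch of the rules as stated in this post, not platform code; `TouchpadEventFlow`, `Outcome`, and `route` are hypothetical names.

```java
/** Hypothetical toy model of the default routing of touch keypad events. */
public final class TouchpadEventFlow {
    private TouchpadEventFlow() {}

    public enum Outcome { CONSUMED_BY_IME, HANDLED_BY_APP, CONVERTED_TO_MOUSE_SCROLL }

    /**
     * @param imeActive          true if an IME with the default behavior is active
     * @param appHandlesTouchpad true if the foreground app consumes SOURCE_TOUCHPAD events
     */
    public static Outcome route(boolean imeActive, boolean appHandlesTouchpad) {
        if (imeActive) {
            // The default IME implementation returns true, so nothing scrolls while typing.
            return Outcome.CONSUMED_BY_IME;
        }
        if (appHandlesTouchpad) {
            // The app's generic motion handling returned true for the touchpad event.
            return Outcome.HANDLED_BY_APP;
        }
        // Fallback: unhandled motion is re-dispatched as synthetic mouse scroll events.
        return Outcome.CONVERTED_TO_MOUSE_SCROLL;
    }
}
```

An IME that overrides onGenericMotionEvent() can of course change the first branch, which is why calling the parent implementation for unhandled events matters.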

It’s very important to provide consistency in event handling. If you’re consuming some touchpad events to recognize gestures, you should typically consume all of them, whether a gesture is recognized or not, while your application is in a clearly defined state. Allowing only a subset of events to be handled by another component or to be converted to mouse scroll events will likely provide inconsistent behavior.

Mouse Scrolling Events

The synthetic events injected into the application when touchpad events are not handled are not a direct 1:1 conversion of each touchpad event. Instead, events are injected for small amounts of movement. The events have the following key characteristics:

- The source is InputDevice.SOURCE_MOUSE, which is a form of a pointer device (see SOURCE_CLASS_POINTER).

- Since there is no actual on-screen pointer, the “pointer” coordinates are also synthesized. You can retrieve these for a particular MotionEvent using getX() and getY(). The Y value is set to the middle of the display vertically, while the X value is set differently based on display orientation:

  - When the device is held in portrait orientation, the X value matches the X-axis value of the touch down point on the touch keypad at the start of the motion. This takes advantage of the touch keypad matching the display in width and resolution.

  - When the device is held in landscape orientation, the X value is set to the middle of the display horizontally.

- The magnitude of the motion in the vertical and horizontal directions can be retrieved using getAxisValue(MotionEvent.AXIS_VSCROLL) and getAxisValue(MotionEvent.AXIS_HSCROLL).

Pointer events are dispatched directly to the view under the pointer coordinates. This is not very significant in many apps, but if the layout contains multiple scrollable views, the events act on the view containing the coordinates synthesized as described above.
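To reason about which of several scrollable views will receive the synthetic events, it can help to reproduce the coordinate rule described above. The following sketch is our own restatement of that rule in plain Java; `SyntheticPointer`, `coordinates`, and `hits` are hypothetical names, and the display sizes in the usage note are illustrative values.

```java
/** Hypothetical restatement of how the synthetic pointer position is chosen. */
public final class SyntheticPointer {
    private SyntheticPointer() {}

    /**
     * @param displayWidth  display width in pixels for the current orientation
     * @param displayHeight display height in pixels for the current orientation
     * @param portrait      true if the device is held in portrait orientation
     * @param touchDownX    X value of the touch down point on the touch keypad
     * @return a two-element array {x, y} in display coordinates
     */
    public static float[] coordinates(int displayWidth, int displayHeight,
            boolean portrait, float touchDownX) {
        // Y is always the vertical middle of the display.
        final float y = displayHeight / 2f;
        // X follows the finger in portrait, and is centered in landscape.
        final float x = portrait ? touchDownX : displayWidth / 2f;
        return new float[] {x, y};
    }

    /** Returns true if (x, y) falls inside the given view bounds. */
    public static boolean hits(int left, int top, int right, int bottom, float x, float y) {
        return x >= left && x < right && y >= top && y < bottom;
    }
}
```

For example, on a 1440 x 2560 portrait display with a touch down at X = 300, the synthetic pointer lands at (300, 1280), so a scrollable view occupying only the top 1000 pixels would not receive the scroll events.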

Using the Touchpad Events

As noted above, you can often get scrolling behavior in your app for free due to the fallback conversion to mouse scroll events. However, you can provide a richer input experience by handling the initial touchpad events directly, which enables:

- Tap (and double-tap) gestures.

- Touch-and-hold gestures.

- Making use of touch positions within the touch keypad.

- Multi-touch gestures (for example, pinching to zoom, or differentiating scrolling with a single finger from scrolling with multiple fingers).

Basic horizontal and vertical fling (swipe) gestures are still possible using the mouse scroll events, and might be used for inertial scrolling or paging, but if you want to differentiate where on the surface the fling occurs you may need more information. In addition, if you’re implementing an IME, the events are only available as touchpad events, since the fallback conversion to mouse scroll events occurs further along the pipeline.

Using the Android GestureDetector

Conveniently, the GestureDetector class works with touch events from a touchpad source, just as it does with events from the touch screen. The internal thresholds used by GestureDetector are configured based on the screen density, but since the resolution of the touch keypad is designed to match that of the built-in display, a sequence of events generated by motion with a particular speed and distance on the touchpad should cause the same callbacks to be invoked as the same motion on the touch screen.

Basic usage of the GestureDetector is demonstrated in the Activity code snippet below. In this instance, a very basic gesture listener pays attention only to fling and scroll events and only logs a few details. Note that the onGenericMotionEvent() method always returns true for touchpad events to ensure that other components don’t deal with a subset of them, and defers to the parent implementation for all other event types. In a practical listener:

- Fling gestures are often filtered with more strict thresholds appropriate to the particular purpose.

- Listener callbacks that return a boolean often return true only if an action was really triggered. GestureDetector.onTouchEvent() returns false if no callback was triggered for an event or if the callback returned false, and the event may sometimes be passed on for further tests, potentially including feeding it to a different gesture detector. Regardless, it’s still important to be consistent with the return values of onGenericMotionEvent(). If your component is in a state in which it is potentially recognizing gestures, it should almost certainly return true for all events, whether or not any particular event completed a gesture.

```java
import android.os.Bundle;
import android.util.Log;
import android.view.GestureDetector;
import android.view.InputDevice;
import android.view.MotionEvent;
// AppCompatActivity comes from the appcompat library used by your project.

public class TouchpadDemoActivity extends AppCompatActivity {
    private static final String TAG = "DemoActivity";

    // Other class member variables go here

    // Not final: these fields are assigned in onCreate(), not in a constructor.
    private GestureDetector mDetector;
    private DemoGestureListener mListener;

    @Override
    protected void onCreate(Bundle savedInstanceState) {
        super.onCreate(savedInstanceState);
        // other onCreate work goes here
        mListener = new DemoGestureListener();
        mDetector = new GestureDetector(this, mListener);
    }

    // Other parts of Activity implementation go here

    @Override
    public boolean onGenericMotionEvent(MotionEvent event) {
        if ((event.getSource() & InputDevice.SOURCE_TOUCHPAD)
                == InputDevice.SOURCE_TOUCHPAD) {
            mDetector.onTouchEvent(event); // return code ignored
            return true; // Always consume event to provide consistency
        }
        // Make sure other events are handled properly.
        return super.onGenericMotionEvent(event);
    }

    private static final class DemoGestureListener
            extends GestureDetector.SimpleOnGestureListener {
        @Override
        public boolean onFling(MotionEvent e1, MotionEvent e2,
                float velocityX, float velocityY) {
            final float distanceX = e2.getX() - e1.getX();
            final float distanceY = e2.getY() - e1.getY();
            Log.i(TAG, "onFling distanceX=" + distanceX
                    + ", distanceY=" + distanceY
                    + ", velocityX=" + velocityX
                    + ", velocityY=" + velocityY);
            return true;
        }

        @Override
        public boolean onScroll(MotionEvent e1, MotionEvent e2,
                float distanceX, float distanceY) {
            Log.i(TAG, "onScroll distanceX=" + distanceX
                    + ", distanceY=" + distanceY
                    + ", current pointer count=" + e2.getPointerCount());
            return true;
        }
    }
}
```
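The stricter fling filtering mentioned above could look something like the following sketch, which a listener's onFling() callback might call with the distances and velocities it receives. `FlingFilter`, `Direction`, and both threshold parameters are hypothetical, and suitable threshold values depend entirely on your use case.

```java
/** Hypothetical helper: accepts a fling only if it is long and fast enough on its main axis. */
public final class FlingFilter {
    private FlingFilter() {}

    public enum Direction { NONE, LEFT, RIGHT, UP, DOWN }

    public static Direction classify(float distanceX, float distanceY,
            float velocityX, float velocityY, float minDistance, float minVelocity) {
        final float absDx = Math.abs(distanceX);
        final float absDy = Math.abs(distanceY);
        if (absDx >= absDy) {
            // Mostly horizontal motion: test distance and speed along X only.
            if (absDx < minDistance || Math.abs(velocityX) < minVelocity) {
                return Direction.NONE;
            }
            return distanceX > 0 ? Direction.RIGHT : Direction.LEFT;
        }
        // Mostly vertical motion: Y grows downward in touch coordinates.
        if (absDy < minDistance || Math.abs(velocityY) < minVelocity) {
            return Direction.NONE;
        }
        return distanceY > 0 ? Direction.DOWN : Direction.UP;
    }
}
```

Even when this returns NONE, the enclosing onGenericMotionEvent() should still return true for the touchpad event, in keeping with the consistency advice above.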

GestureDetector provides onScroll() callbacks with more than one pointer ID (more than one finger touching) but the other callbacks won’t work with multiple touches. For multi-touch gesture support, you could explore the use of ScaleGestureDetector, which can be used in conjunction with GestureDetector. You may find it useful to explore the Android developer documentation on gesture handling.

If you already use GestureDetector for gestures on the touch screen, you may find it convenient to use the same listener for the same gestures on the touch keypad. Keep in mind that GestureDetector does not take the device ID into account, so if you share the same GestureDetector instance as well as the same listener, be careful about mixing touch event sequences from the two input devices. If your shared listener maintains state between gestures, also decide when that state becomes stale after the device ID changes. Otherwise you might encounter odd behavior, since gestures can be inappropriately recognized based on changes in coordinates between events from different surfaces. A couple of approaches to mitigate this include:

- Use a different GestureDetector instance for each input device.

- Synthesize a MotionEvent with an action of ACTION_CANCEL when the device ID changes between real events, and pass that to the GestureDetector ahead of the event with the new device ID. The code fragment below demonstrates this approach, with the same test applied for events arriving from either onGenericMotionEvent() or onTouchEvent().

```java
static final MotionEvent CANCEL_EVENT = MotionEvent.obtain(0, 0,
        MotionEvent.ACTION_CANCEL, 0, 0, 0, 0, 0, 0, 0, 0, 0);
int mLastTouchDeviceId;

/**
 * Utility method to wrap passing a touch event to the gesture detector, with
 * a cancel event injected if the device ID has changed from the last event.
 * This should be called consistently for any event source using the same
 * detector.
 * @param event The motion event being handled.
 * @return the return code from the gesture detector.
 */
private boolean checkTouchEventForGesture(final MotionEvent event) {
    final int id = event.getDeviceId();
    // Note that this does inject a cancel on the very first event
    // from any device, which is harmless.
    if (mLastTouchDeviceId != id) {
        mDetector.onTouchEvent(CANCEL_EVENT);
    }
    mLastTouchDeviceId = id;
    return mDetector.onTouchEvent(event);
}

@Override
public boolean onGenericMotionEvent(MotionEvent event) {
    if ((event.getSource() & InputDevice.SOURCE_TOUCHPAD)
            == InputDevice.SOURCE_TOUCHPAD) {
        checkTouchEventForGesture(event); // return code ignored
        return true; // Always consume event to provide consistency
    }
    // Make sure other events are handled properly.
    return super.onGenericMotionEvent(event);
}

@Override
public boolean onTouchEvent(MotionEvent event) {
    checkTouchEventForGesture(event); // return code ignored
    return true;
}
```
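The first approach, a separate detector per input device, can be sketched with a small lazily populated map keyed by device ID. The class below is a hypothetical, Android-free illustration: `PerDeviceRegistry` is not a platform class, and in an Activity the factory would typically create a GestureDetector for each new device ID.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.function.IntFunction;

/** Hypothetical helper: keeps one lazily created instance per input device ID. */
public final class PerDeviceRegistry<T> {
    private final Map<Integer, T> byDeviceId = new HashMap<>();
    private final IntFunction<T> factory;

    /** @param factory creates the instance for a device ID the first time it is seen */
    public PerDeviceRegistry(IntFunction<T> factory) {
        this.factory = factory;
    }

    /** Returns the instance for this device, creating it on first use. */
    public T forDevice(int deviceId) {
        return byDeviceId.computeIfAbsent(deviceId, id -> factory.apply(id));
    }
}
```

An event handler could then look up `registry.forDevice(event.getDeviceId())` and pass the event to the detector it returns, so that sequences from the touch screen and the touch keypad never share gesture state.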